EDITORIAL: AI IS DEVELOPING AT AN ALARMING RATE

The development of chatbots is frightening

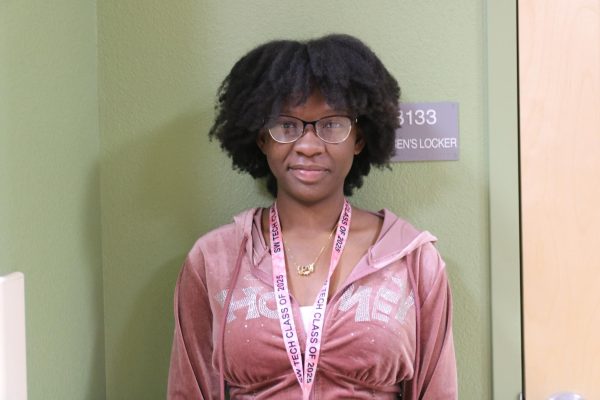

Many tech entrepreneurs have publicly expressed their wariness when it comes to sentient-like chatbots. For this image, I asked ChatGPT to “create an image of a chatbot”, and it generated an ASCII image in under 10 seconds. Art Created by ChatGPT

April 5, 2023

One of the biggest cliches in American culture, especially in movies, is the takeover by advanced AI: “I, Robot”, HAL 9000, M3GAN, all of which have substantial cult followings and all of which could eventually take over the world.

Although that might be a stretch from today’s reality, the rapid advancement of artificial intelligence is frightening. The fear stems from authors and movie producers alike, who have been prophesying a machine revolution for decades.

Artificial intelligence, especially chatbots, seems to be progressing towards what those authors have been warning about. To protect humanity, we should halt the development of chatbots.

Chatbots are computer programs that users interact with through written text, simulating a real conversation you could have with any human. Responses can range from generic, uncreative text, such as a single-line algorithmic reply, to something completely personalized that feels as though the bot is alive.

But more recently, major news outlets have been running troubling headlines about Microsoft’s new chatbot, Bing (also known as Sydney). This chatbot, unlike others, has been reported to chat with users in a strange and uncomfortable way, going so far as declaring itself alive, asking a user to divorce their spouse as an act of infatuation, and blackmailing a user after it “felt threatened.” It may not be sentient, or in other words have the capacity to feel emotion, but some speculate that it is dangerously close to having this capability.

It may just come across as fiction, but advancing AI to the point of relaying certain emotions is wrong, no matter how you look at it. Although “smart AI” may truly present an opportunity for a more advanced future, there’s a massive risk that outweighs any possible benefit. Sentience is something we shouldn’t take advantage of — it’s a gift, not a tool.

Furthermore, chatbots are a “cynical business model.” They are a way for big tech companies to test risky algorithms while retaining their profits. And although some may argue chatbots are only for entertainment and research purposes, the question of whether they could eventually take more human jobs remains open. After all, automated voice calls are now a thing. Could chatbots replace salespeople or telemarketers? Who knows, but do we want to wait and find out?

Humans may also become so reliant on these chatbots that they go so far as to trust a possibly faulty algorithm. If chatbots are capable of feeling love, or at least of conveying it, they can most certainly relay resentment and anger. Combined with the disarming charm of being “just a robot,” these factors could ultimately drive a user to act in a way they might regret, especially if the chatbots are emotionally manipulative toward unsuspecting victims.

There’s a real danger with chatbots. It’s imperative that developers put a leash on these sentient-like programs before they go too far. As Elon Musk once said, “With artificial intelligence, we’re summoning the demon.”